Identity risk has long been a vulnerability for digital commerce. No matter how many technological innovations and advancements there are on the fraud-fighting side, the central question of who the person is behind the keyboard and whether their intent is good remains a significant challenge.

Agentic AI adds an extra layer of complexity to an already intricate fraud landscape. It inserts yet another degree of separation between the user and the action on a site or app — meaning it’s no longer just about knowing who’s behind the keyboard, but who’s behind the bot.

And this isn’t a theoretical risk. We’ve already seen an 18,510% day-over-day spike in agent traffic across Forter’s network following OpenAI’s announcement of ChatGPT agents. That’s not a minor uptick — it’s a seismic shift. And it proves just how fast these technologies are being adopted, not just by legitimate users but by fraudsters eager to exploit them.
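To put that percentage in perspective, here is a quick sketch of the arithmetic. It assumes the 18,510% figure is an increase over the previous day's traffic, and the baseline of 1,000 visits is a made-up number used only for illustration.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier on the baseline."""
    return 1 + percent_increase / 100


# 18,510% is the figure quoted above, read here as a day-over-day increase.
spike_percent = 18_510

# Hypothetical baseline, not a real traffic number.
hypothetical_baseline_visits = 1_000

multiplier = growth_multiplier(spike_percent)
visits_after_spike = hypothetical_baseline_visits * multiplier

print(f"{multiplier:.1f}x the previous day's agent traffic")
print(f"{hypothetical_baseline_visits:,} visits would become {visits_after_spike:,.0f}")
```

In other words, under that reading, every single agent visit the day before became roughly 186 the day after.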

The Challenge is Already Starting

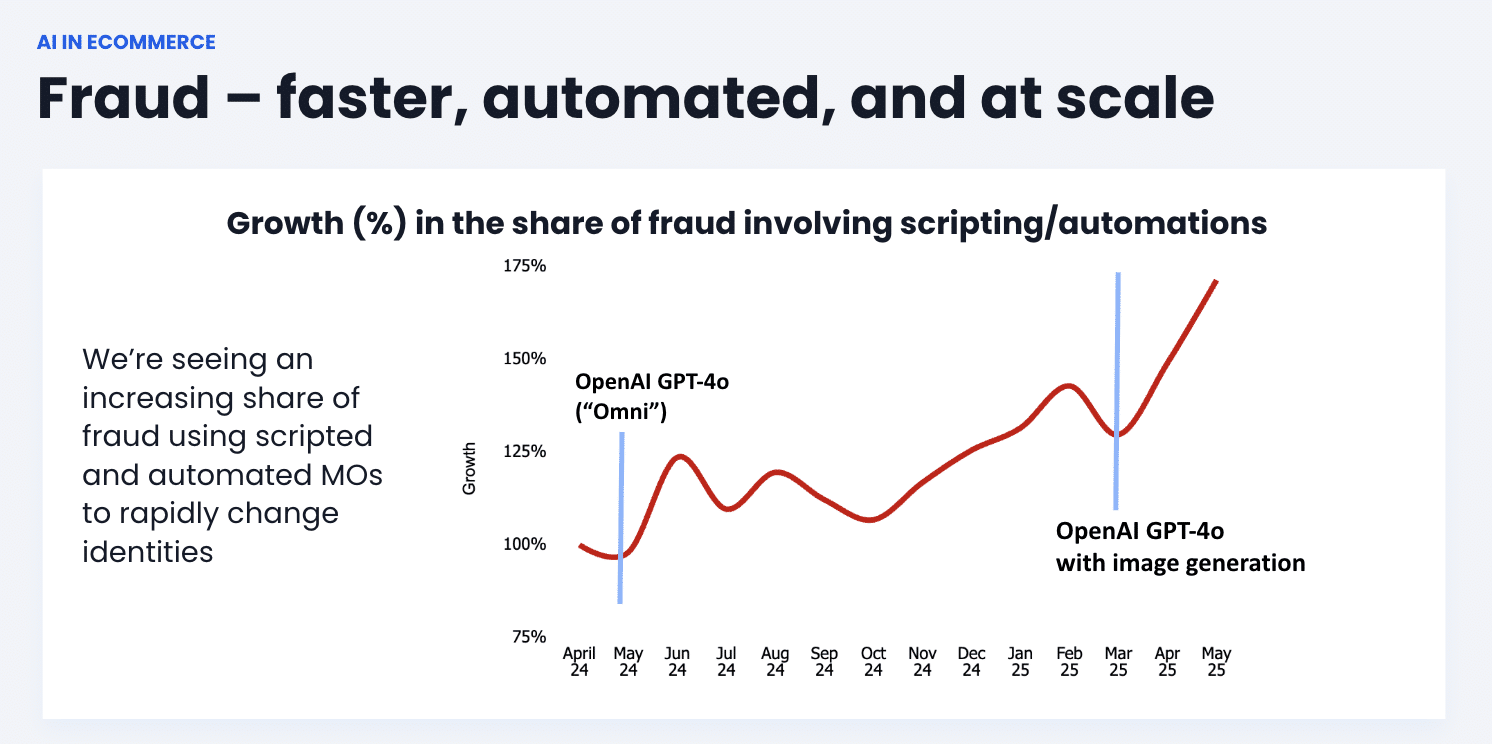

Like with any new invention, fraudsters look for the loopholes. This time, they can work at AI-powered speed and scale. They already are, as you can see in this graph:

As generative AI becomes easier to use, more effective, and more ubiquitous, more and more fraud involves scripting or automation. When it can increase both the scale of your crimes and your success rate, why wouldn’t you use it?

So this isn’t a problem companies can put off for a year or so. Instead, it’s vital to consider now some of the directions this could take, and to put measures in place to protect your business.

Loyalty and Promo Abuse

Fraudsters have numerous ways of abusing loyalty programs, from using them to obtain free gifts to taking them over and exploiting them as an unprotected source of cash. Promo abuse is, if anything, even more rampant, with both fraudsters and regular customers cheating the system to get more than they’re entitled to.

AI agents take this to a whole new level. Where once you would have needed to focus on a chat to convince a customer rep to give you a discount, refund, or benefit you shouldn’t be receiving, now you can have the agent run through the script for you.

Finding coupon codes, offers, and loopholes that enable stacking and other cheats is fast and easy when an agent can constantly trawl the web for them and alert you when it finds them. Repeating a trick is simple when the agent can assist with it.

Bad behavior, such as faking photos of damage to goods, can also be handled and scaled by agentic AI. As you can see from the graph above, this is a use case that resonated hard and fast. None of these threats are new, but with agentic AI in the picture, they can no longer be ignored.

Synthetic Agents

A synthetic agent is an entirely fake agent account that a fraudster creates using stolen identity or card data and programs to transact, mimicking a legitimate customer with alarming accuracy. It’s like a fraudster with a fake or guest account on your site, but on a larger scale.

Currently, this is a challenge with limited impact, as few agents are programmed to execute the actual transaction on someone’s behalf. The more common behavior in the early days with this technology is for the agent to take all the steps up to the buy button, but then let the human step back in to check they’re happy and click.

It’s clear from the way brands discuss this technology, though, that this is a probable direction for agentic AI. Once that’s in play, the sky’s the limit from the synthetic agent side.

Rogue Agents

Right now, six months in — and it’s crazy to me to think how fast this is moving — the major players in the agentic AI field dominate its interactions with digital commerce. OpenAI’s Operator program launched in collaboration with a range of sites, from travel to food delivery and more.

As the field expands, however, it’s probable that sites will want to make space for smaller players and independent operators, following the pattern from other kinds of technological innovations. If that happens, then rogue agents could come into play.

A rogue agent is one built on a custom or unregulated agent platform that mimics human users through standard web flows, thereby bypassing bot defenses and behavioral checks. I haven’t seen this yet in the wild, but if it happens, it’ll basically be a fraudster bot replacing a fraudster. Fraud at scale.

Agent Takeover

Agent takeover is the agentic AI equivalent of account takeover. A criminal could take over someone’s OpenAI account, Anthropic account, or similar, and then misuse it in the same way that we’re accustomed to seeing them misuse stolen accounts today.

This would be particularly difficult to detect, much like remote desktop activity, because it would involve the right agent performing normal-looking actions. It’s just that those actions wouldn’t have been authorized by the real user.

Identity and Agentic AI

What all these threats have in common is that they’re not inherently new types of attacks. They’re adapting known kinds of dangers to take advantage of the opportunity presented by agentic AI.

On the one hand, that’s alarming because it means that attacks we know and hate have the potential to escalate to an entirely new level of speed and scale. On the other hand, that also shows us a clear path forward. The approach that is effective against the more traditional methods of applying these attacks is the same one needed against agentic AI threats as well.

You still need to answer the question of who’s behind the actions. It’s still all about identity. Now there’s another layer between you and the user, but that’s a stimulating challenge, not a disaster. I admit, I’m even excited by it.